Cloudflare went down. The internet followed. Your business cannot.

On 18 November 2025 a single provider glitch took a big slice of the internet offline.

Cloudflare stumbled, and with it went X, ChatGPT, McDonald's, ecom, SaaS, games and media.

Real client result.

We saw the first errors. Within 30 minutes every client of ours was back online and serving traffic again. No panic. No all-night war room. Just a Plan B doing exactly what it was built to do.

At the same time thousands of companies watched their sites die in real time. Trading platforms alone saw massive lost volume while users stared at error pages instead of placing orders.

Multiply that by ecom, lead forms, paid traffic, live events. The total bill could plausibly run into the billions.

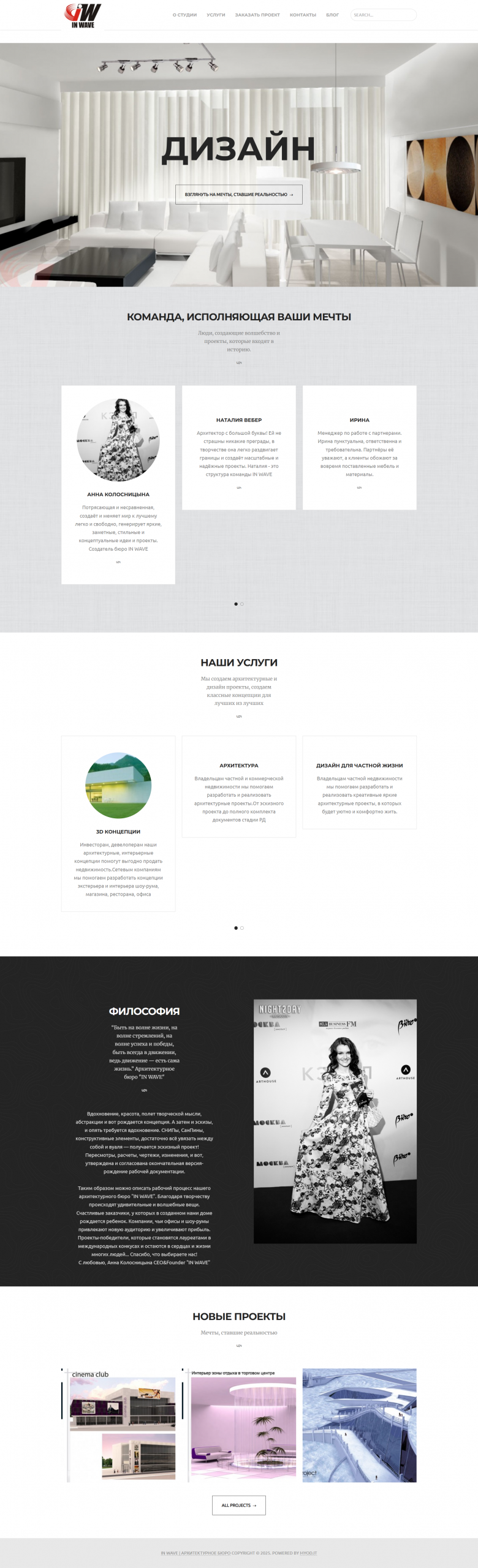

This is not a story about Cloudflare being bad.

This is a story about what happens when your entire revenue stream stands on one leg.

What actually happened

Very short version.

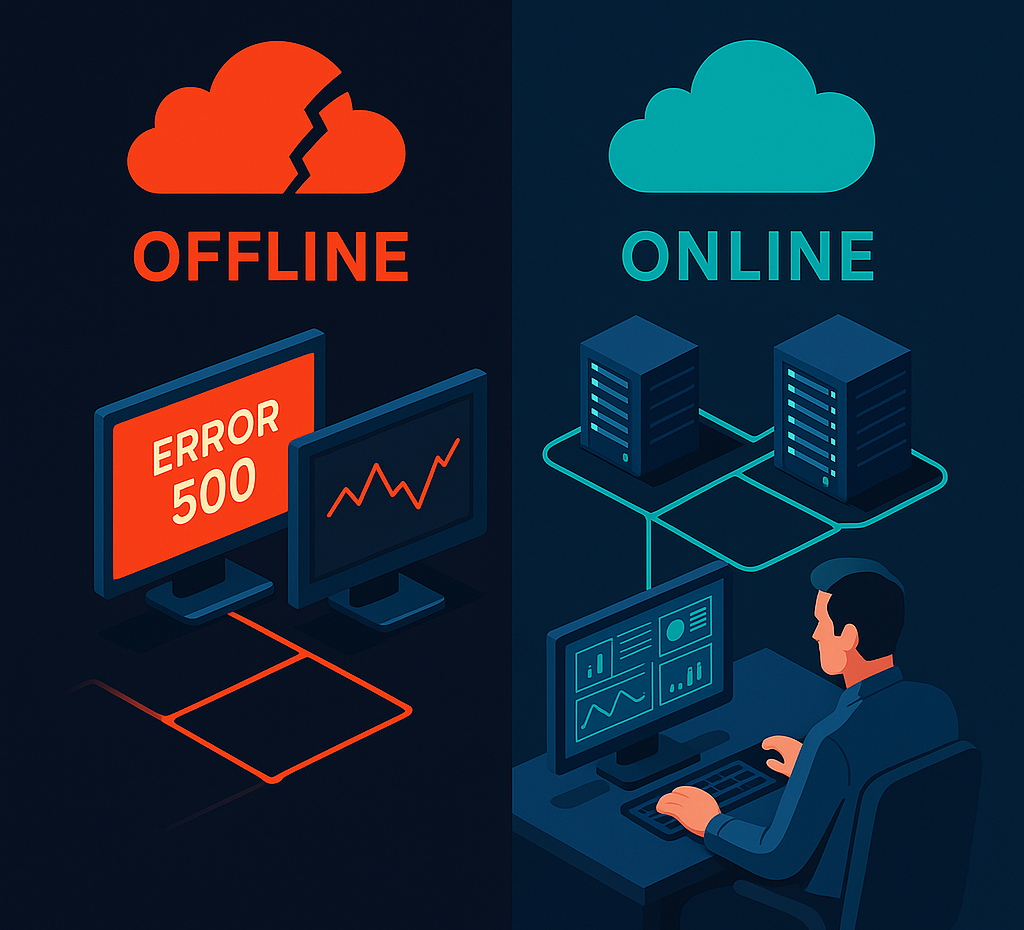

Cloudflare pushed a change that created an oversized internal configuration file. That file propagated across their global network and started to break core services. Requests were reaching Cloudflare but many of them ended with 5xx errors.

From the outside the internet looked “down”.

In reality one key infrastructure layer had a bad day, and everything that fully depended on that layer went dark with it.

If your business lives behind Cloudflare and you had no alternative path ready, you were out. No matter how nice your app is. No matter how much you pay for ads.

What we saw from inside

We monitor our stack and our clients very aggressively.

First signs were simple.

Error rate up. Success rate down. Response times crazy. Alerts firing from different regions at once.

Within minutes it was clear this was not a single app or single data center.

It was an infrastructure provider.

That is the moment when most teams open X, see “Cloudflare is down”, swear and start improvising.

We did something else.

We opened the runbook for “CDN or edge provider outage”.

Traffic started to move.

DNS changes. Routing changes. Health checks validated that the new path worked.

Thirty minutes after the first alerts our clients were fully reachable on alternative routes.

No hacks. No lucky guess.

Just work we had done earlier when things were calm.

Why so many businesses lost money

Here is the pattern I keep seeing when we audit infrastructures.

New project.

Team picks a popular edge provider. Adds WAF, CDN, DNS, rate limiting, maybe email and workers on top. Connects everything directly to it. Puts all traffic through one choke point.

The deck says “global redundant network”.

Reality says “single vendor”.

When that vendor fails you do not have a Plan B.

You have a logo on a slide and a vague idea that “they have SLAs”.

The brutal truth?

Resilience you did not design will never magically appear when you need it.

What a real Plan B looks like

Plan B is not a slogan.

Plan B is a set of very boring capabilities that you test in production.

Here is what worked for us.

1. DNS that is under your control

You must be able to move critical domains away from any provider in minutes.

- Clear map of which domains are business critical

- Time to live (TTL) values low enough for emergency changes on those records

- Documented procedure for switching DNS to another provider or directing it straight to origin

DNS is your steering wheel.

If you cannot turn it fast you are a passenger.
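The TTL point in the list above is easy to check automatically. Here is a minimal sketch, assuming you can export your records into a simple inventory. The `DnsRecord` type, the 300 second threshold and the example.com names are illustrative assumptions, not values from our setup or from any provider.

```python
from dataclasses import dataclass

# Assumed emergency threshold; pick a value that matches your own recovery target.
MAX_EMERGENCY_TTL_SECONDS = 300


@dataclass(frozen=True)
class DnsRecord:
    name: str
    ttl_seconds: int
    business_critical: bool


def slow_to_move(records, max_ttl=MAX_EMERGENCY_TTL_SECONDS):
    """Return names of business-critical records whose TTL exceeds max_ttl."""
    return [
        record.name
        for record in records
        if record.business_critical and record.ttl_seconds > max_ttl
    ]


# Hypothetical inventory, for example exported from your DNS provider.
inventory = [
    DnsRecord("www.example.com", 3600, True),
    DnsRecord("checkout.example.com", 120, True),
    DnsRecord("blog.example.com", 86400, False),
]

flagged = slow_to_move(inventory)
print(flagged)
```

Any name this prints is a critical record that resolvers may keep cached for longer than your emergency window allows.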

2. Alternative paths around Cloudflare

For some clients we send traffic through another CDN.

For others we have direct paths to origin with strict firewalls and whitelists.

The important part is simple.

The path exists and is already tested. You do not want to be writing firewall rules when half the internet is screaming.
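To make the allowlist idea concrete, here is a small sketch of the check a direct-to-origin path relies on. It assumes the allowlist is a set of CIDR ranges. The ranges below are reserved documentation ranges used as placeholders, not real CDN or office ranges, and `is_allowed` is a hypothetical helper name. In practice this logic usually lives in a firewall or load balancer rule, not in application code.

```python
import ipaddress

# Hypothetical allowlist, e.g. a backup CDN range and an office VPN range.
# These are documentation-only ranges, not real provider ranges.
ALLOWED_NETWORKS = [
    ipaddress.ip_network("203.0.113.0/24"),
    ipaddress.ip_network("198.51.100.16/28"),
]


def is_allowed(client_ip, networks=ALLOWED_NETWORKS):
    """Return True if client_ip falls inside any allowlisted network."""
    address = ipaddress.ip_address(client_ip)
    return any(address in network for network in networks)


print(is_allowed("203.0.113.42"))
print(is_allowed("192.0.2.10"))
```

Writing and testing this list on a calm day is the whole point. During an outage you only flip the route.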

3. Monitoring that tells you the real story

We do not only monitor our own apps.

We watch external dependencies from multiple locations.

If Cloudflare, a payment provider or an email API starts to fail we see it as separate signals. That lets us say “this is not our code, this is upstream” and switch strategy fast.
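One way to turn those separate signals into a quick verdict is sketched below. It assumes each probe result is a tuple of dependency, probe region and success flag. The dependency names, the regions and the rule "two or more failing regions means widespread" are illustrative assumptions, not our production thresholds.

```python
from collections import defaultdict


def classify_dependencies(probes, min_failing_regions=2):
    """Classify each dependency as healthy, localized or widespread.

    probes: iterable of (dependency, region, ok) tuples.
    """
    seen = defaultdict(set)
    failing = defaultdict(set)
    for dependency, region, ok in probes:
        seen[dependency].add(region)
        if not ok:
            failing[dependency].add(region)

    verdicts = {}
    for dependency in seen:
        failing_regions = len(failing[dependency])
        if failing_regions == 0:
            verdicts[dependency] = "healthy"
        elif failing_regions >= min_failing_regions:
            verdicts[dependency] = "widespread"
        else:
            verdicts[dependency] = "localized"
    return verdicts


# Hypothetical probe results from three regions.
probes = [
    ("edge-provider", "eu", False),
    ("edge-provider", "us", False),
    ("edge-provider", "ap", True),
    ("payments", "eu", True),
    ("payments", "us", True),
    ("email-api", "ap", False),
    ("email-api", "eu", True),
]

verdicts = classify_dependencies(probes)
print(verdicts)
```

A "widespread" verdict on an upstream dependency is the signal to stop debugging your own code and reach for the runbook.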

4. Runbooks and ownership

Every known incident type in your stack should have three things.

- When to trigger the procedure

- Step by step actions

- Who owns the decision

When Cloudflare broke we did not hold a philosophy discussion.

We followed the playbook we had already argued about months ago.
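The three parts above can be kept as structured data so that an incomplete runbook is caught before the outage, not during it. This is a minimal sketch. The `Runbook` type, the trigger wording and the owner name are hypothetical, and the steps only paraphrase the actions described earlier.

```python
from dataclasses import dataclass, field


@dataclass
class Runbook:
    incident_type: str
    trigger: str = ""
    steps: list = field(default_factory=list)
    owner: str = ""

    def missing_parts(self):
        """Return which of the three required parts are still empty."""
        missing = []
        if not self.trigger.strip():
            missing.append("trigger")
        if not self.steps:
            missing.append("steps")
        if not self.owner.strip():
            missing.append("owner")
        return missing


# Hypothetical entries; the values are placeholders, not our real runbook.
edge_outage = Runbook(
    incident_type="CDN or edge provider outage",
    trigger="5xx errors reported from several regions at once",
    steps=[
        "Switch DNS to the alternative path",
        "Apply routing changes",
        "Validate the new path with health checks",
    ],
    owner="on-call infrastructure lead",
)
draft = Runbook(
    incident_type="Payment provider outage",
    trigger="Payment error rate above agreed limit",
    steps=["Enable backup payment provider"],
)

print(edge_outage.missing_parts())
print(draft.missing_parts())
```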

5. Regular chaos drills

You can only trust a backup path that has seen real traffic.

So from time to time we deliberately shift part of the traffic to alternative routes. Limited window. Clear metrics.

If something breaks we fix it and update the runbook.

That way when the big outage hits, the “new” path is not new at all.
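The split itself is usually done with weighted DNS or a load balancer, but the logic is simple enough to show. Below is a sketch of a deterministic percentage split, assuming each request carries some stable identifier. `route_for` and the identifiers are hypothetical names for illustration only.

```python
import hashlib


def route_for(request_id, drill_percent):
    """Send roughly drill_percent of identifiers to the alternative path.

    Hashing keeps the decision stable: the same identifier always
    lands on the same path for a given percentage.
    """
    digest = hashlib.sha256(request_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % 100
    return "alternative" if bucket < drill_percent else "primary"


# Hypothetical drill: shift about 10 percent of traffic.
sample = [f"session-{i}" for i in range(10_000)]
shifted = sum(route_for(request_id, 10) == "alternative" for request_id in sample)
print(shifted / len(sample))
```

A stable split means a user does not bounce between paths mid-session, which keeps drill metrics readable.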

A 30-minute audit you can run with your team

You do not need a huge project to start.

Sit down with your tech lead and walk through this checklist.

Step 1. Map revenue to systems

List the flows that directly equal money.

- Sign up and purchase

- Lead forms and demo requests

- Payments and deposits

- Trading or booking sessions

For each flow write which providers are involved. CDN, DNS, auth, payments, email, SMS, hosting.

Step 2. Circle the single points of failure

If one vendor going offline kills the entire flow, mark it in red.

This is not only Cloudflare.

Could be Stripe. Could be your mail provider that handles password resets. Could be a regional hosting provider.
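Steps 1 and 2 fit in a few lines once the map exists. This is a sketch, assuming you record for every flow which providers can serve each role. The flows, roles and provider names below (including `backup-psp` and `mailvendor`) are made-up examples, not a description of any real stack.

```python
def single_points_of_failure(flows):
    """Return, per flow, the roles that have fewer than two distinct providers."""
    red = {}
    for flow, roles in flows.items():
        weak_roles = sorted(
            role for role, providers in roles.items() if len(set(providers)) < 2
        )
        if weak_roles:
            red[flow] = weak_roles
    return red


# Hypothetical map of revenue flows to providers per role.
flows = {
    "purchase": {
        "cdn": ["cloudflare"],
        "dns": ["cloudflare"],
        "payments": ["stripe", "backup-psp"],
    },
    "password_reset": {
        "email": ["mailvendor"],
        "hosting": ["region-a", "region-b"],
    },
    "status_page": {
        "hosting": ["static-host-a", "static-host-b"],
    },
}

red_circles = single_points_of_failure(flows)
print(red_circles)
```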

Step 3. Decide the maximum acceptable downtime

Be honest.

For most serious businesses the real answer for key flows is less than one hour. For trading, bookings and medical platforms it is often minutes.

Anything longer is just money burning.
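Once the limits are written down, they can be compared with what you actually measured in a drill or a real incident. A minimal sketch follows. The flow names and minute values are hypothetical, and a flow with no measurement is treated as over budget on purpose.

```python
def flows_over_budget(max_downtime_minutes, measured_recovery_minutes):
    """Return flows whose measured recovery is missing or slower than the target."""
    over = []
    for flow, limit in max_downtime_minutes.items():
        measured = measured_recovery_minutes.get(flow)
        if measured is None or measured > limit:
            over.append(flow)
    return sorted(over)


# Hypothetical targets and drill results, in minutes.
targets = {"purchase": 60, "trading": 5, "lead_forms": 60}
measured = {"purchase": 30, "trading": 30}

over_budget = flows_over_budget(targets, measured)
print(over_budget)
```

Treating an unmeasured flow as a failure is a deliberate design choice: a recovery time nobody has tested is a guess, not a number.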

Step 4. Design at least one alternative path

For every red circle write the simplest version of Plan B.

- Second CDN or WAF ready to take traffic

- Direct path to origin for internal users

- Backup payment provider

- Static degraded pages hosted outside your main stack

It does not need to be perfect on day one.

It needs to exist.

Step 5. Put it into a runbook and assign a name

Every Plan B must have an owner.

Someone who is allowed to push the big red button when metrics go crazy.

If nobody is responsible then during the outage everyone will wait for permission and watch dashboards while revenue dies.

What yesterday changed

Before this outage many teams could tell themselves a story.

“Our provider is huge. They know what they are doing. Outages happen to smaller players.”

Yesterday that story died.

One configuration change in one large network took down a long list of giants and a huge number of smaller businesses.

The question now is simple.

Will you treat it as bad luck or as free training data?

How we can help

We run a digital agency with a heavy technical core.

We sit between business, marketing and infrastructure. We build websites, AI automation and integration for teams that cannot afford to be offline.

We already stress tested our stack and our processes in a real global outage.

We know how it behaves when the internet itself is on fire.

If you want someone with battle data to look at your setup, reach out.

Ask your team one question.

If Cloudflare goes down tomorrow, how long until we are back online?

If the room goes quiet, that is your real incident.